Prompt Engineering for Developers: A Beginner’s Guide

Prompt engineering is quickly becoming a...

Microservices Sahi Hai (right thing to do) for your startup.

Microservices have become a buzzword in...

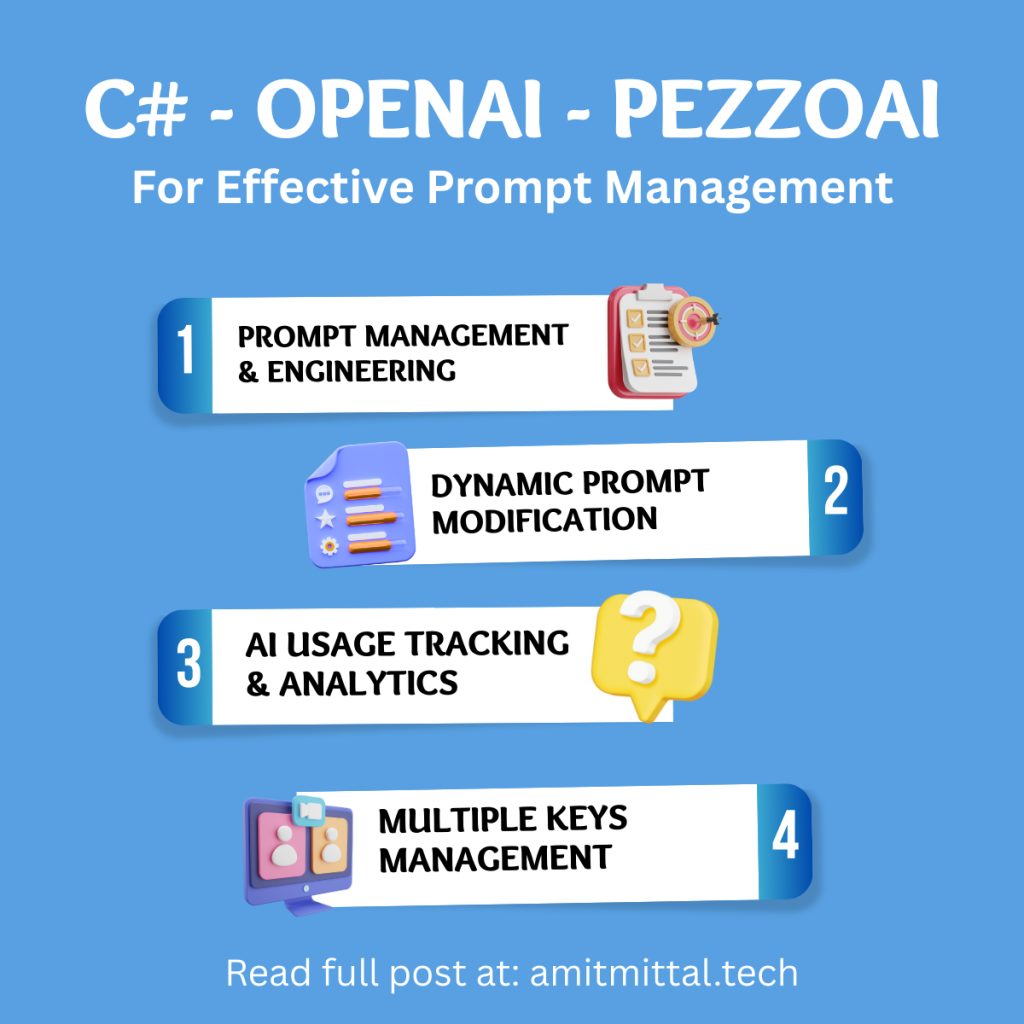

How to Set Custom Headers and Endpoint for Using PezzoAI Proxy with OpenAI’s Official C# SDK

When integrating OpenAI’s C# SDK into...

How I Solved Partial Data Streaming with LLMs in C# for JSON Responses

When integrating Large Language Models (LLMs)...